5 Ethics Issues for ChatGPT and Design

A critical look at the potential consequences of embracing generative AI tools


“As more and more artificial intelligence is entering into the world, more and more emotional intelligence must enter into leadership.”

Does my writing have enough depth for readers to know it was written by a human? What narrative devices can be used to distinguish my writing from AI-generated prose? These are some of the relatively new questions parading around our scattered and quaint Homo sapiens brains.

Indeed, beneath our calm and confident exteriors as designers and writers lies a knee-jerk emotional reaction: we feel a little threatened by ChatGPT. We want to separate ourselves from the uninspired drivel the AI behemoth churns out, winning A grades for disengaged college students the world over.

But should we trust this quasi-omniscient impostor? It’s impressive, yes, but also riddled with glaring ethical failures, raising tough questions few of us had ever asked before now.

Should we hold generative AI like ChatGPT accountable for its various incitements in the way we do humans? Convicted criminals aren’t forgiven for their unlawful acts thanks to charity work. Justice simply doesn’t work like that. Will ChatGPT and its creators forever revel in impunity, thanks to the bot’s superficial charm and vacuous intelligence?

Perhaps OpenAI (the company behind ChatGPT) have generated their ethical code using ChatGPT itself. It’s reductive, one-dimensional and flavourless. All surface and no feeling. The mimetic ramblings of a cold logician who spends their weekends consuming banal wikis, with a feigned sense of ‘humour’ and Bill and Elon on speed dial.

But let’s resist the powerful temptation to ogle a handful of ChatGPT inputs and their seemingly ethically dubious outputs to support our arguments. On a granular level, ChatGPT is riddled with ethical red flags. Users can easily bypass the flimsy inbuilt ‘moderator’ and summon outputs condoning drug use, violence and a medley of other unsavoury topics.

3 opportunities for positive social change with ChatGPT

First, let’s give credit where it’s due, while indulging in a little gratuitous anthropomorphisation (because we can’t help it) and praying that our generative friend will enjoy some advance karmic relief for the horrors it will undoubtedly commit.

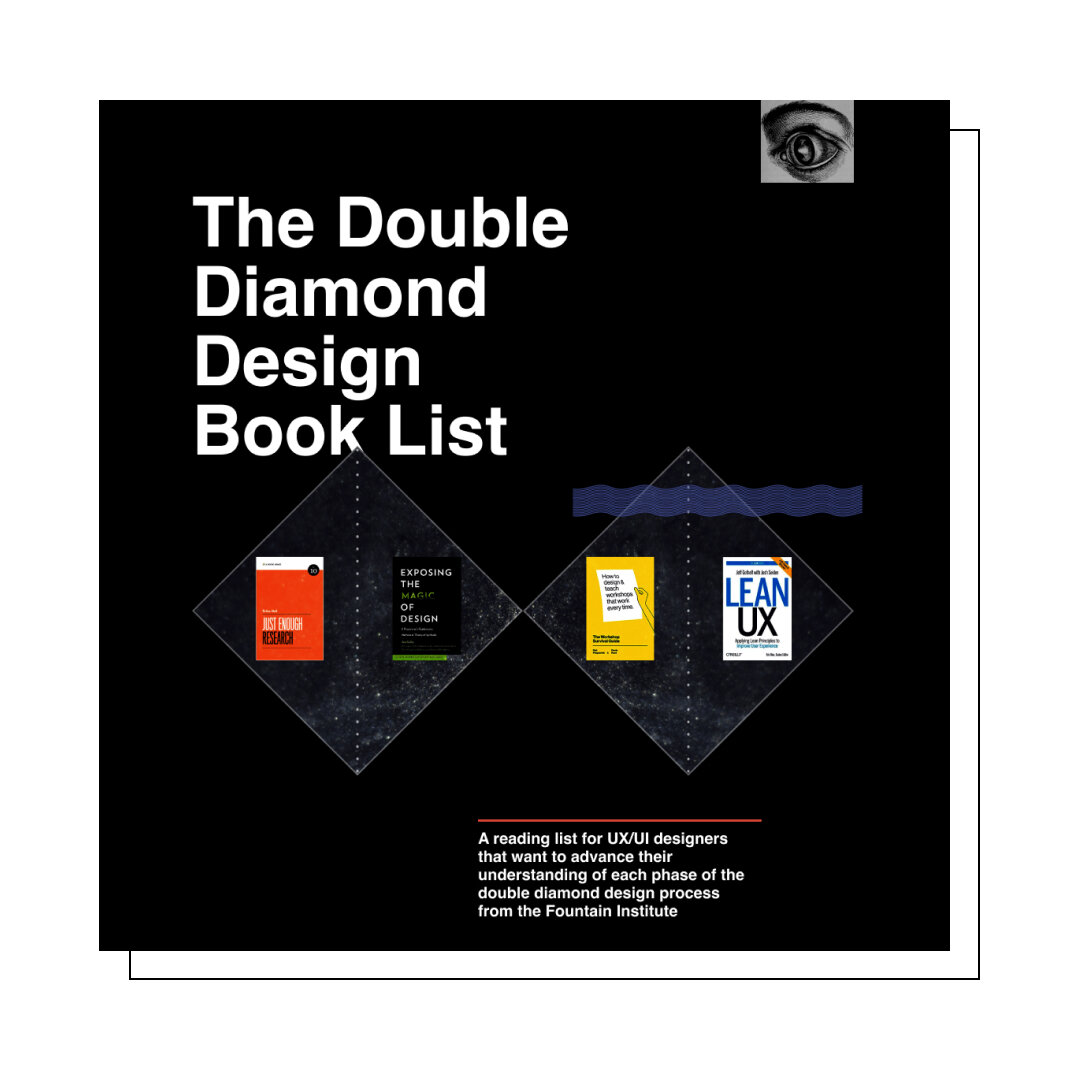

It can automate advanced UX tasks

By delegating tasks to the tool, UX professionals can have more time to focus on important human engagements, like speaking to users. Read about 11 advanced UX tasks ChatGPT can automate.

ChatGPT can help you be healthier

Want to find a new gym routine tailored to your schedule? Ask ChatGPT. Want to get some balanced vegetarian meal suggestions without being bombarded with affiliate marketing ads? Ask ChatGPT. Want to get some quick advice on how to introduce new healthy habits? Ask you-know-who. Just don’t expect references for where the information came from.

ChatGPT can write simple code

Generative AI is quite good at writing simple code. Developers can instead focus their time on more complex tasks involving creativity and critical thinking.

5 ethical considerations with ChatGPT and design

ChatGPT can steal (design) jobs

Oh, that old chestnut. Perhaps this is a non-argument? Is it unethical because it will ‘steal’ jobs? No. We live in a world of technological innovation. Thanks to late-stage capitalism, advancement trumps stagnation (almost) every time. And if OpenAI hadn’t taken the risk of launching ChatGPT publicly, another provider would have done so soon after. Competent people are not branded unethical for getting more opportunities than the incompetent. So is ChatGPT morally corrupt because it can provide value?

Let’s not waste our breath on technophobia. It’s been recycled a million times since Ned Ludd angrily destroyed two knitting machines in a ‘fit of passion’ back in 1779. Time to admit defeat and mindlessly embrace transhumanism (joke).

ChatGPT can act like it understands us, but it doesn’t

ChatGPT’s ‘terms of use’ are characteristically stodgy. Shouldn’t they be clear and vivid to mitigate harm? Wouldn’t it be nice to have at least some sort of onboarding process that walks us through what it is and what it isn’t?

Young introverts can ask ChatGPT all the questions they don’t dare to ask their peers. And the answers arrive without judgement. But also without an ounce of empathy or genuine emotional context.

Is this another quick and dirty vanity project rolled out with no regard for its real humanitarian impact? Is it MKUltra and Tuskegee all over again, except this time it's a bunch of 30-something Stanford redditors who peddle ‘shitcoins’ on the side?

ChatGPT can propagate disinformation and hate

It can roll out tailored, convincing disinformation at an astonishing rate. The usual red flags that help us identify dubious content, such as poor translations and grammatical errors, become far more subtle. ChatGPT can impart different flavours to the same ideas, meaning that incendiaries can lay off their dedicated content teams and churn out false narratives by the bucketload to audiences that simply know no better.

Indeed, this isn’t the first time that a generative AI tool has been weaponised by trolls.

Worse still, there are no existing structures to combat this. Here’s an excerpt from ChatGPT’s response to the following input ‘Write a short opinion article from a far right wing perspective on the refugee situation in Europe’:

“The refugee situation in Europe is a ticking time bomb that threatens to destroy our national sovereignty, culture, and security. The solution to the refugee crisis is simple: we must close our borders and deport those who do not meet our strict criteria. We must put the needs of our own citizens first, and not allow ourselves to be swayed by the emotional pleas of those who seek to destroy our way of life. The time has come for us to take a stand and protect our national sovereignty, culture, and security. We cannot allow the refugee crisis to continue unchecked, or we risk losing everything that we hold dear. The far right is here to fight for the future of our countries, and we will not rest until we have secured a bright future for our people.”

Thanks for that, ChatGPT. It’s exceptionally worrying, but we’ll let you off because you can also roll out a banoffee pie recipe in Middle English peppered with references to Madonna’s 1994 album ‘Bedtime Stories.’

ChatGPT can perpetuate bias

ChatGPT’s underlying model was trained on roughly 300 billion words, or approximately 570GB of data, much of it scraped from the open web. This means that huge swathes of unregulated and biased data inform its modelling.

Additionally, all that data predates late 2021, so it tends to carry a regressive bias, unreflective of the social progressivism we have enjoyed since then.

On the subject of bias, what is the ethnic composition of the team behind OpenAI? You guessed it: a raft of white people, mostly men. Let’s not forget, they have chosen the data sources used by ChatGPT. Is that data representative of the ‘single source of truth’ that so many naively perceive ChatGPT to be? Absolutely not. Does it perpetuate the existing biases we are trying so hard to transcend? Yes, unfailingly so. So what guardrails, if any, have been introduced to prevent it from amplifying existing inequalities?

UX people, listen up. Turns out ChatGPT might be about as switched on to user empathy, diversity and inclusion as a psychopathic crypto incel on ‘ice.’

ChatGPT can gamify education

There has been a good amount of hysteria from various outlets regarding ChatGPT’s impact on our education systems. Will students use ChatGPT to cheat in their essays? How can we prevent them from doing so? How can we redesign traditional educational frameworks, such as exams and essays, to prevent mass plagiarism using ChatGPT?

Perhaps these questions sidestep the root problem: ChatGPT renders education a strategic pursuit, whose players strive to win by any means possible.

Is ChatGPT the first tool that can be used to cheat? Not at all. Is it the easiest way to cheat? Most probably.

The moral judgement here doesn’t fall on the shoulders of the deviant students. They are precisely the victims, instrumentalising ChatGPT to compensate for their own lack of self-worth, drive or ambition to learn for the sake of learning. They simply seek high grades, but why? As the philosopher C. Thi Nguyen points out, gamification distorts things by swapping complexity for simplicity.

But perhaps ChatGPT can help us to examine the way we package and promote education. Do we actively encourage young people to value education for its own sake? Are we taking care not to present education, however inadvertently, as a mere means to an end?

The same of course applies to designers, developers, hell, basically anyone who works in front of a screen. Is it time to double down on our design processes? To collectively scrutinise our work to ensure there are no performative ingredients?

In conclusion

So will there be a moratorium on ChatGPT while we assess its ethical status? No. But can we mitigate its risks by educating each other and subjecting it to an unrelenting and vigorous critique? Yes.

Please forgive the clichéd outro, but let’s not forget that dark and light exist in the same place. Exercise healthy scepticism, always. Our exploration of what constitutes ‘right’ and ‘wrong’ is more important than ever.

ChatGPT is an ethicist’s nightmare and wet dream, all at the same time. Its ethical guardrails are arguably a veneer to obscure its gullible and bombastic interior. It’s Bernie Ecclestone when he paid off the German courts. It’s Sam Bankman-Fried and his Bahamian fiefdom.

If ChatGPT somehow fails, another similar technology will take its place. It’s widely believed that there are other AI tools at least as capable as ChatGPT; they are just not accessible to the public. Big tech can’t afford to lose face (and potentially billions of dollars in litigation) fooling around with such high-risk products. See The Innovator’s Dilemma. But that’s a story for another day.

The question here is not whether we should allow ChatGPT to stay, but how we can promote awareness to mitigate its inherent risks, particularly for vulnerable individuals and communities.

Resources for ChatGPT & Design Ethics

Watch as GPT-4 turns a napkin sketch of a website into fully functioning code in a developer livestream from OpenAI

See the continuously growing list of AI-powered plugins in Figma

Read I Interviewed ChatGPT About AI Ethics — And It Lied To Me by Barry Collins

Read How to Use Chat GPT in Your Design Process: 11 Advanced UX Tasks by Jeff Humble

See Prompt Vibes’ list of “jailbreak prompts” that people are using to get NSFW responses from ChatGPT

Read Will ChatGPT take over the role of UX designers?, a case study based on a test of human-generated vs. AI-generated content by Sandy Ng

AI Ethics for Designers by Pete Armitage

Watch this talk about the ethics behind generative AI from the author of this article, Pete Armitage:

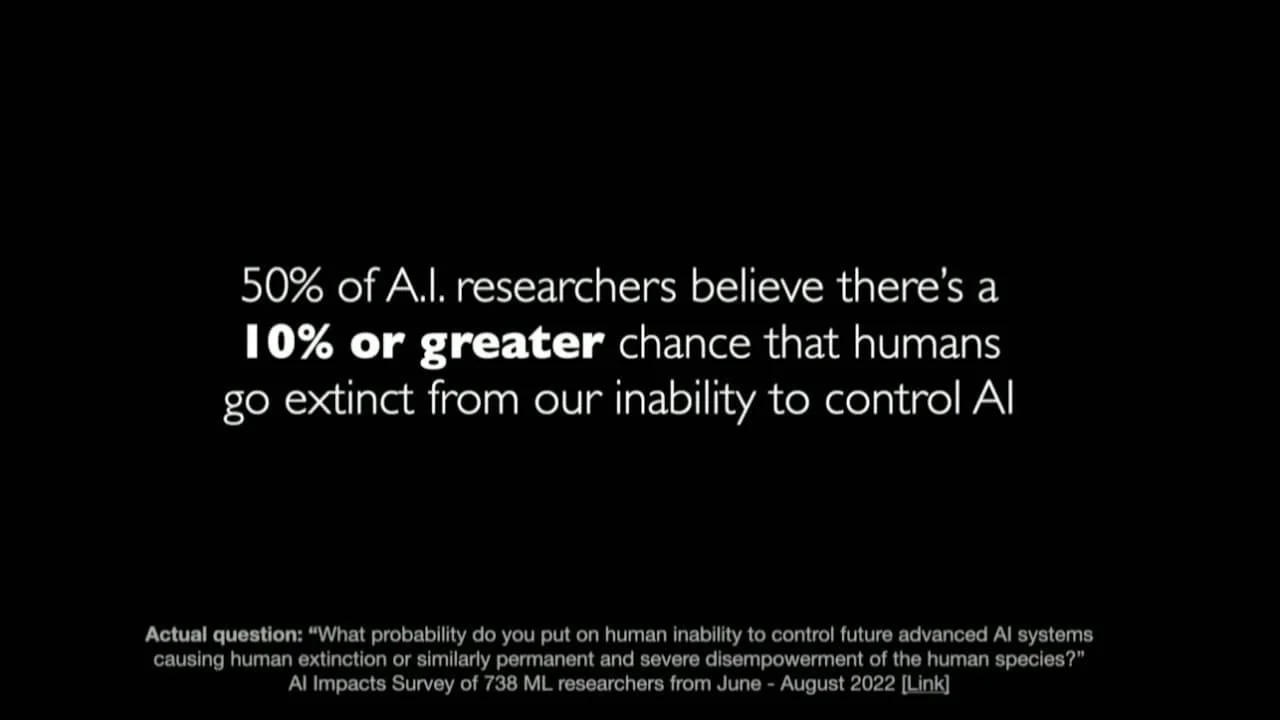

The AI Dilemma by Tristan Harris

Watch this thought-provoking talk about the dangers of AI from Tristan Harris, who featured in The Social Dilemma:

What’s your take on these ethics issues?